What Is On-Chip Liquid Cooling? Benefits, Challenges, and Use Cases

What Is On-Chip Liquid Cooling?

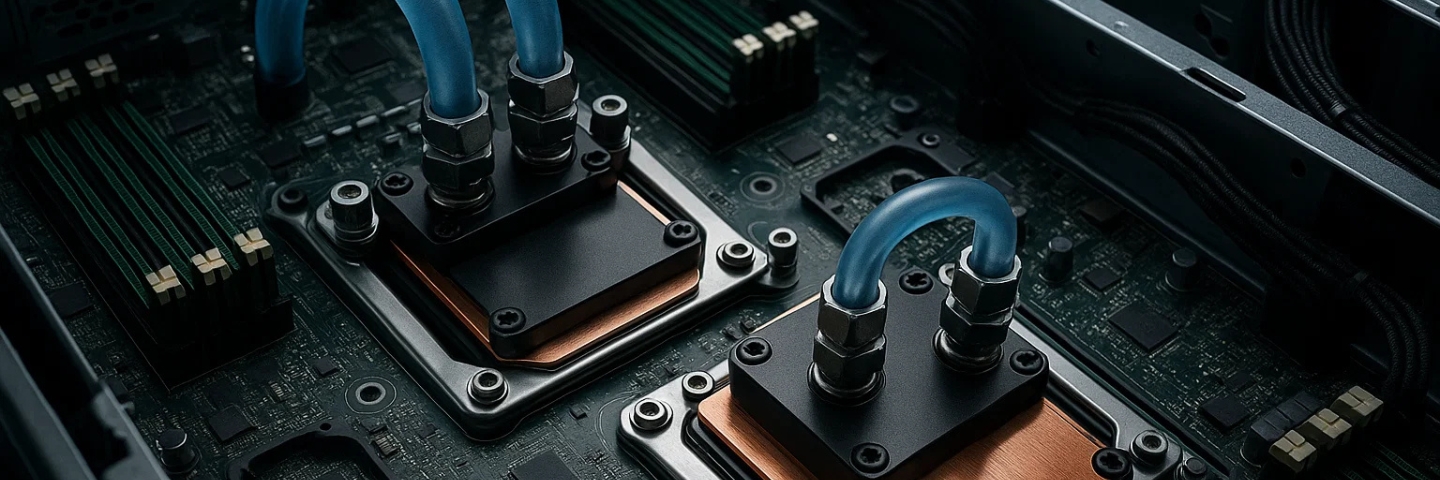

On-chip liquid cooling is a direct-to-chip (D2C) thermal management solution that removes heat at its source: the processor. In this system, cold plates are mounted directly onto the CPUs and GPUs inside a server. A liquid coolant, typically treated water, a water-glycol mixture, or a dielectric fluid, flows through these plates, absorbing the heat the components generate and carrying it away through a closed loop.

The heated liquid is then cooled in a Coolant Distribution Unit (CDU) or heat exchanger and returned to the loop. The liquid loop itself runs independently of room-level air cooling and is engineered to handle extreme thermal loads, making it particularly suitable for AI, machine learning, and high-performance computing (HPC) environments.
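To get a feel for how much coolant the loop has to move, it can be sized from the sensible-heat relation Q = ṁ · c_p · ΔT. The sketch below is a back-of-envelope estimate, not a design tool: it assumes plain water with approximate room-temperature properties, and the 100 kW rack load and 10 K supply-to-return temperature rise in the example are illustrative values. The helper `coolant_flow_lpm` is hypothetical.

```python
# Minimal sketch: coolant flow needed to carry a heat load away from the
# cold plates, using the sensible-heat relation Q = m_dot * c_p * dT.
# Property values are approximate figures for liquid water near room
# temperature (assumption); water-glycol mixtures have a lower c_p.

WATER_CP_J_PER_KG_K = 4186.0      # specific heat of water, J/(kg*K)
WATER_DENSITY_KG_PER_M3 = 997.0   # density of water at about 25 C, kg/m^3


def coolant_flow_lpm(heat_load_w: float, delta_t_k: float,
                     cp: float = WATER_CP_J_PER_KG_K,
                     density: float = WATER_DENSITY_KG_PER_M3) -> float:
    """Return the volumetric flow (litres per minute) needed to absorb
    heat_load_w watts with a supply-to-return rise of delta_t_k kelvin."""
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    mass_flow_kg_s = heat_load_w / (cp * delta_t_k)   # m_dot = Q / (c_p * dT)
    volume_flow_m3_s = mass_flow_kg_s / density       # kg/s -> m^3/s
    return volume_flow_m3_s * 1000.0 * 60.0           # m^3/s -> L/min


if __name__ == "__main__":
    # Illustrative inputs only: a 100 kW rack and a 10 K coolant temperature rise.
    print(f"{coolant_flow_lpm(100_000, 10):.2f} L/min for the rack")
```

With these inputs the loop needs roughly 144 L/min. Halving the allowed temperature rise doubles the required flow, which is why flow rate and temperature have to be managed together.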

Why It’s Gaining Traction

Modern processors used in AI training, real-time inference, or large-scale simulations can draw more than 1,000 watts per chip. Air cannot remove that much heat efficiently without enormous airflow, noise, and fan energy.
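One way to see the airflow problem is to compare how much air and how much water must flow to carry the same heat at the same temperature rise. The sketch below assumes approximate room-temperature properties for both fluids and an illustrative 1,000 W chip with a 10 K rise; `volume_flow_m3_s` is a hypothetical helper, not a library function.

```python
# Minimal sketch comparing the volumetric flow of air and of water needed to
# remove the same heat load at the same temperature rise:
#   Q = rho * V_dot * c_p * dT  =>  V_dot = Q / (rho * c_p * dT)
# Property values are approximate figures near room temperature (assumption).

AIR = {"density": 1.2, "cp": 1006.0}       # kg/m^3, J/(kg*K)
WATER = {"density": 997.0, "cp": 4186.0}   # kg/m^3, J/(kg*K)
M3_S_TO_CFM = 2118.88                      # cubic metres/second -> cubic feet/minute


def volume_flow_m3_s(heat_w: float, delta_t_k: float, fluid: dict) -> float:
    """Volumetric flow (m^3/s) of `fluid` needed to carry heat_w watts at a rise of delta_t_k."""
    return heat_w / (fluid["density"] * fluid["cp"] * delta_t_k)


if __name__ == "__main__":
    heat_w, delta_t_k = 1000.0, 10.0   # illustrative: one 1,000 W chip, 10 K rise
    air = volume_flow_m3_s(heat_w, delta_t_k, AIR)
    water = volume_flow_m3_s(heat_w, delta_t_k, WATER)
    print(f"air:   {air:.4f} m^3/s ({air * M3_S_TO_CFM:.0f} CFM)")
    print(f"water: {water * 60000:.2f} L/min")
    print(f"air needs about {air / water:,.0f}x the volume flow of water")
```

With these property values a single chip needs about 0.083 m³/s of air (roughly 175 CFM) versus about 1.4 L/min of water. The ratio, roughly 3,500, is simply the ratio of the two fluids' volumetric heat capacities, so it does not depend on the heat load or temperature rise chosen.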

On-chip liquid cooling brings the coolant directly to the heat source, enabling much more effective thermal transfer and allowing servers to operate at peak performance — even under heavy workloads.

This precision cooling approach is already in use in some of the world's most advanced data centers and is rapidly becoming the standard for racks exceeding 50–100 kW, particularly in AI-driven designs.

Key Benefits

- Unmatched Cooling Density: Supports racks running at 100–150 kW or more, depending on the number of processors and the configuration.

- Improved Hardware Performance: Cooler chips perform better and last longer. On-chip cooling minimizes thermal throttling, allowing CPUs and GPUs to sustain full speed.

- Energy Efficiency: Per unit volume, water absorbs on the order of 3,500 to 4,000 times more heat than air for the same temperature rise. Moving heat with liquid therefore needs far less fan power and lowers (improves) Power Usage Effectiveness (PUE).

- Reduced Room Cooling Load: Since most of the heat is captured at the source, the need for air conditioning in the room is reduced.

- Closed-Loop System: Most solutions are fully sealed, which minimizes evaporation, coolant loss, and leak risk. Unlike immersion cooling, the electronics are never submerged; the coolant stays inside the cold plates and tubing.
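PUE is the ratio of total facility power to the power delivered to IT equipment, so 1.0 is the theoretical ideal and lower is better. The sketch below shows the arithmetic; the `pue` helper and both sets of overhead figures are hypothetical placeholders chosen to show how a smaller cooling overhead moves the ratio, not measured or vendor data.

```python
# Minimal sketch of Power Usage Effectiveness (PUE):
#   PUE = total facility power / IT equipment power
# 1.0 would mean every watt reaches IT equipment; lower is better.
# The example figures below are hypothetical placeholders, not measurements.


def pue(it_power_kw: float, cooling_power_kw: float, other_overhead_kw: float = 0.0) -> float:
    """Return PUE from the IT load and facility overheads (cooling, distribution losses, lighting)."""
    if it_power_kw <= 0:
        raise ValueError("IT power must be positive")
    total_kw = it_power_kw + cooling_power_kw + other_overhead_kw
    return total_kw / it_power_kw


if __name__ == "__main__":
    # Hypothetical: the same 1,000 kW IT load with two different cooling overheads.
    print(f"higher cooling overhead: PUE = {pue(1000, 400, 100):.2f}")
    print(f"lower cooling overhead:  PUE = {pue(1000, 150, 100):.2f}")
```

Server fans count toward IT power in this ratio, so the PUE improvement comes mainly from reduced facility-side cooling such as chillers and room air handlers.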

Challenges and Considerations

While on-chip liquid cooling delivers unmatched thermal performance, it's a step beyond traditional data center design — and requires planning, investment, and operational change.

- CDU Infrastructure Required: A Coolant Distribution Unit (CDU) is essential for managing temperature, flow rate, and pressure. This adds equipment to the rack or row and requires space and maintenance.

- Air Cooling Still Needed: Only the components fitted with cold plates, typically CPUs and GPUs, are cooled directly. Memory, storage, and networking components still rely on airflow, often handled by rear-door heat exchangers (RDHx) or traditional cooling setups.

- Integration with IT Hardware: Not all servers are liquid-ready. Choosing OEM hardware with factory-installed cold plates is often required, which can limit flexibility.

- Leak Management and Safety: Leaks are rare, but they must be planned for with monitoring, leak-detection systems, and service protocols. Quick-disconnect fittings and fail-safes are critical.

- Training and Operational Readiness: Staff need to be familiar with liquid infrastructure, including commissioning, maintenance, and emergency response.

- Cost and ROI: On-chip cooling is more capital-intensive upfront than air-based methods. However, the long-term ROI comes from energy savings, density gains, and enabling premium workloads.
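Two of these considerations reduce to simple arithmetic: how much heat is still left for air (or an RDHx) when cold plates capture only part of a rack's load, and how long the extra upfront cost takes to repay. The sketch below assumes a hypothetical 120 kW rack with 75% of its heat captured by liquid, plus hypothetical cost and savings figures; real capture fractions and savings depend on the hardware and site. Both helper names are hypothetical.

```python
# Two back-of-envelope checks; every input in the example is hypothetical.


def residual_air_load_kw(rack_power_kw: float, liquid_capture_fraction: float) -> float:
    """Heat left for air (or an RDHx) when cold plates capture only part of the rack's heat."""
    if not 0.0 <= liquid_capture_fraction <= 1.0:
        raise ValueError("capture fraction must be between 0 and 1")
    return rack_power_kw * (1.0 - liquid_capture_fraction)


def simple_payback_years(extra_capex: float, annual_savings: float) -> float:
    """Years for annual savings to repay the extra upfront cost.
    Simple payback: ignores discounting, financing, and maintenance costs."""
    if annual_savings <= 0:
        raise ValueError("annual savings must be positive")
    return extra_capex / annual_savings


if __name__ == "__main__":
    # Hypothetical 120 kW rack with 75% of its heat captured by cold plates.
    print(f"air-side load: {residual_air_load_kw(120, 0.75):.0f} kW")
    # Hypothetical: 500,000 in extra capex repaid by 125,000 per year in savings.
    print(f"simple payback: {simple_payback_years(500_000, 125_000):.1f} years")
```

Even at a high capture fraction, the residual 30 kW in this example is comparable to an entire conventional air-cooled rack, which is why the air side cannot be ignored.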

Ideal Use Cases

- AI Training Clusters: Chips in these clusters often exceed 1,000 watts each, producing heat densities beyond the capability of air-based cooling.

- HPC and Scientific Computing: On-chip cooling is ideal for systems where compute density and performance take precedence over cost.

- Greenfield Builds: In new facilities, on-chip systems can be integrated from the start for maximum efficiency and space utilization.

- Sustainability-Focused Operators: Organizations pursuing heat reuse, water savings, or net-zero targets benefit from the efficiency and closed-loop water management.

Conclusion

On-chip liquid cooling is no longer niche; it's a necessity for the next generation of data centers. As AI models grow larger and compute demands intensify, D2C cooling lets operators scale without overheating infrastructure or driving up energy costs.

By cooling processors at the source, it delivers performance, density, and sustainability benefits that traditional systems can’t match. For operators building AI-ready data centers, on-chip cooling is not just a solution — it’s the foundation.
